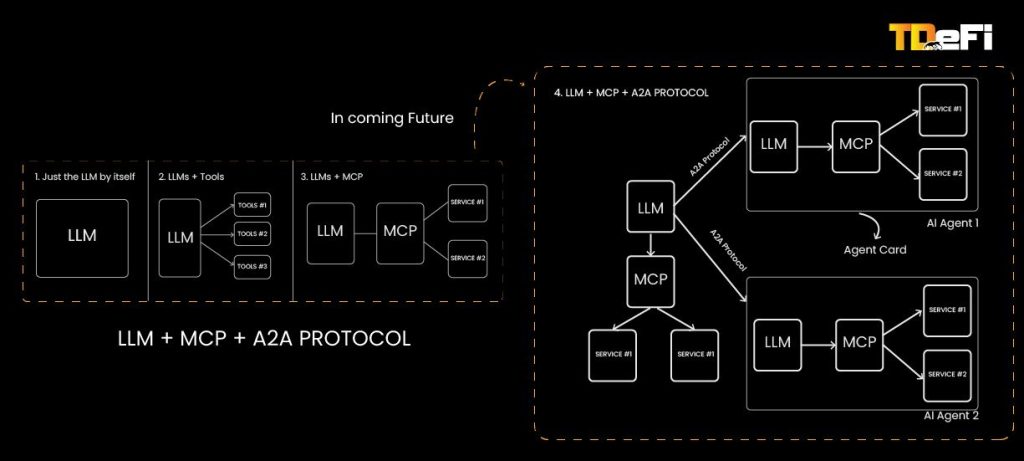

AI agents are becoming powerful collaborators, but even the smartest large language model (LLM) is limited on its own. By itself, an LLM is basically just predicting the next chunk of text; it can’t truly take actions or access live data without help. To be useful in real-world tasks, AI agents need to communicate, both with external tools and data and with each other. This is where two emerging open protocols come into play: Anthropic’s Model Context Protocol (MCP) and Google’s Agent-to-Agent (A2A) protocol. These protocols are laying the foundation for the next wave of AI, with a focus on making that communication efficient and secure. In simple terms, MCP and A2A provide the “glue” that connects AI agents to the world around them, turning isolated models into genuinely useful assistants.

In the sections that follow, we’ll dive deeper into each protocol, see how they work, and why they matter. We’ll explore Anthropic’s MCP first – how it standardizes tool integration for AI (making it easier to plug an LLM into various apps). Then we’ll examine Google’s A2A – how it enables cross-agent communication and coordination. Along the way, we’ll look at real-world examples that show these protocols in action. Finally, we’ll bring it all together with a fresh example of a Web3 investment platform using MCP as a tool layer and A2A for agent teamwork, illustrating how both protocols complement each other in a practical scenario.

Anthropic’s Model Context Protocol (MCP)

Anthropic’s Model Context Protocol (MCP) is an open standard introduced in late 2024 to address a big challenge: connecting AI assistants with the data, tools, and context they need in a consistent way. In essence, MCP defines how an application (like an AI agent or chatbot) should fetch information or invoke tools, so the AI isn’t stuck in a vacuum. Anthropic likes to describe MCP as a “USB-C port for AI applications”: a universal connector between AI models and the outside world. Just as USB-C standardized how we plug in devices (same cable for your phone, laptop, etc.), MCP standardizes how AI agents plug into different data sources and services.

Why is this important? Before MCP, if you wanted your AI to use some external resource (say, fetch a document, query a database, or call an API), you often had to write custom integration code or use bespoke frameworks for each case. It was like having a bunch of dongles, one for each tool. This approach is fragile and hard to scale: every new data source requires yet another custom connector. Frameworks like LangChain tried to help, but still often ended up with one-off connectors per system. MCP changes the game by offering a universal protocol. Developers can write to one standard, and any MCP-compliant AI client can talk to any MCP-compliant data/tool server without custom code for each integration. It’s analogous to how REST APIs standardized communication between web services; MCP aims to do the same for AI tool and context integration.
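To put rough numbers on the “one dongle per tool” problem, here is a back-of-the-envelope sketch of the usual argument (our own illustration, not a figure from Anthropic): with bespoke integrations, every AI application needs its own connector for every tool, whereas with a shared protocol each application implements the client side once and each tool implements the server side once. The app and tool counts are made up.

```python
# Back-of-the-envelope comparison of integration effort (illustrative only).

def bespoke_connectors(num_apps: int, num_tools: int) -> int:
    """Without a shared protocol: one custom connector per (application, tool) pair."""
    return num_apps * num_tools


def protocol_implementations(num_apps: int, num_tools: int) -> int:
    """With a shared protocol: each app implements the client once, each tool the server once."""
    return num_apps + num_tools


if __name__ == "__main__":
    for apps, tools in [(3, 5), (10, 40)]:
        print(
            f"{apps} apps x {tools} tools: "
            f"{bespoke_connectors(apps, tools)} bespoke connectors vs "
            f"{protocol_implementations(apps, tools)} protocol implementations"
        )
```

With 3 apps and 5 tools that is 15 bespoke connectors versus 8 protocol implementations; at 10 apps and 40 tools the gap grows to 400 versus 50.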

From a business perspective, MCP’s value is huge: it makes it faster and easier to build AI-powered applications because you don’t have to reinvent the wheel for each new integration. It also future-proofs your AI systems – if tomorrow you switch your AI model or add a new data source, you can plug into it via MCP rather than writing a whole new pipeline. This flexibility (even to switch between different LLM providers) and built-in best practices for security are core promises of MCP. In short, MCP helps ensure AI assistants have the right context at the right time, reliably and securely.

How MCP Works: The Process Flow

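The MCP Client (AI side): This is typically your AI application or agent (for example, the Claude AI assistant, a specialized AI tool, or any app that uses an LLM). Strictly speaking, the MCP specification calls that application the host, and the client is the connector the host runs for each server it talks to, but the role is the same: the client is like the driver initiating a trip, deciding where to go to get information.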

The MCP Server (tool/data side): This is a lightweight service that exposes a specific data source or functionality to the AI in a standardized way. Think of each server as a different “destination” or service: one might be a server for database queries, another for cloud documents, another for an API like weather or Jira. Each MCP server speaks the same language/protocol, even if it connects to different underlying systems.
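The two roles above can be sketched in a few lines. MCP messages are JSON-RPC 2.0, and a client typically discovers a server’s tools with a `tools/list` request and invokes one with `tools/call`. This is a toy under stated assumptions, not a real MCP implementation: it skips the `initialize` handshake and the real transports (stdio or HTTP), passes messages through a direct function call, and the `get_forecast` tool, its schema, its canned answer, and the `ToyClient` class are all hypothetical.

```python
import json
from typing import Optional

# Hypothetical tool advertised by a toy "weather" server.
TOOLS = [
    {
        "name": "get_forecast",
        "description": "Return a short forecast for a city (canned data).",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]


def get_forecast(city: str) -> str:
    # Canned answer; a real server would call a weather API here.
    return f"Forecast for {city}: sunny, 22 degrees C"


def _reply(req_id, *, result=None, error=None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle_request(raw: str) -> str:
    """Server side: dispatch one JSON-RPC request string, return the response string."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        return _reply(req["id"], result={"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "get_forecast":
            return _reply(req["id"], error={"code": -32602, "message": "Unknown tool"})
        text = get_forecast(**params.get("arguments", {}))
        content = [{"type": "text", "text": text}]
        return _reply(req["id"], result={"content": content, "isError": False})
    return _reply(req["id"], error={"code": -32601, "message": f"Method not found: {method}"})


class ToyClient:
    """Client side: sends requests over any transport (here, a direct function call)."""

    def __init__(self, transport):
        self.transport = transport  # callable: request JSON string -> response JSON string
        self.next_id = 1

    def request(self, method: str, params: Optional[dict] = None) -> dict:
        msg = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            msg["params"] = params
        self.next_id += 1
        resp = json.loads(self.transport(json.dumps(msg)))
        if "error" in resp:
            raise RuntimeError(resp["error"]["message"])
        return resp["result"]


if __name__ == "__main__":
    client = ToyClient(handle_request)
    tools = client.request("tools/list")["tools"]  # 1. discover what the server offers
    print([t["name"] for t in tools])
    result = client.request(  # 2. invoke a tool by name with JSON arguments
        "tools/call", {"name": "get_forecast", "arguments": {"city": "Paris"}}
    )
    print(result["content"][0]["text"])
```

The client never needs to know how `get_forecast` works internally; it learns the tool’s name and input schema from `tools/list`, which is what lets one client work with many different servers.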

Google’s Agent-to-Agent (A2A) Protocol

Now let’s shift from one agent talking to tools, to agents talking to each other. Google’s Agent-to-Agent (A2A) protocol is an open standard launched in April 2025 and designed to enable AI agents to interoperate and collaborate across different systems. Where MCP gives an agent access to data and actions, A2A lets multiple agents securely exchange information and coordinate actions, even if they were built by different vendors or live in different applications. The motivation is clear: as companies deploy more AI agents (for customer support, IT automation, analytics, etc.), the real magic happens when these agents can team up. A2A is about creating a common language and set of rules so that an agent from Company A can “talk” to an agent from Company B (or simply two different internal agents that didn’t know about each other beforehand) to get a job done together.

Google introduced A2A with a strong emphasis on enterprise scenarios. Imagine an office environment: one agent handles your calendar, another manages your customer database, another monitors network security. Traditionally, these operate in silos. With A2A, they could collaborate – e.g., the calendar agent asks the security agent for building access logs when scheduling a meeting with a visitor, or a sales agent pulls info from a finance agent for a client proposal. The key is interoperability: A2A is built so that agents, even if created by different providers (and there are many: OpenAI, Anthropic, custom in-house agents, etc.), can work together if they follow A2A. It’s like setting a universal protocol for “AI agent conference calls” – any invitees speaking the A2A language can join the conversation.

How A2A Works and What It Enables

So, what does A2A actually do? At its heart, A2A defines a protocol for a client agent and a remote agent to interact productively. The client agent is the one initiating a task (think of it as the requester or coordinator), and the remote agent is the one acting on that task (the responder or specialist). For example, your “project manager” agent might be the client that asks a “calendar” agent (remote) to schedule a meeting. This interaction under A2A involves a few key elements:

Capability Discovery: How do agents know which agent can do what?

A2A introduces an “Agent Card”, essentially a JSON profile in which an agent advertises its skills and endpoints. If Agent A wants something done, it can query a directory or network of agents, retrieve their Agent Cards, and figure out who can help. For instance, an agent card might say “I am CalendarBot, I can schedule events, check availability, require authentication X.” This is analogous to a business card or a service registry description. It’s crucial because it means agents don’t need prior hard-coded knowledge of each other; they can discover capabilities dynamically.
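Here is a minimal sketch of capability discovery, assuming the Agent Cards have already been fetched into memory (in practice each agent publishes its card over HTTP). The field names loosely follow A2A’s Agent Card, but the two agents, their URLs, and their skills are hypothetical, fields such as authentication requirements are left out for brevity, and the `find_agents` helper is our own invention that simply matches a requested skill tag against each card.

```python
import json

# Two illustrative Agent Cards. Field names loosely follow A2A's Agent Card;
# the agents, URLs and skills are hypothetical, and authentication is omitted.
CARDS = [
    json.loads("""{
      "name": "CalendarBot",
      "description": "Schedules events and checks availability.",
      "url": "https://calendar.example.com/a2a",
      "version": "1.0.0",
      "capabilities": {"streaming": true},
      "skills": [
        {"id": "schedule_event", "name": "Schedule event", "tags": ["calendar", "scheduling"]},
        {"id": "check_availability", "name": "Check availability", "tags": ["calendar"]}
      ]
    }"""),
    json.loads("""{
      "name": "InvoiceBot",
      "description": "Looks up invoices and payment status.",
      "url": "https://finance.example.com/a2a",
      "version": "0.3.0",
      "capabilities": {"streaming": false},
      "skills": [
        {"id": "invoice_status", "name": "Invoice status", "tags": ["finance"]}
      ]
    }"""),
]


def find_agents(cards: list, wanted_tag: str) -> list:
    """Return (name, endpoint URL) for each card advertising a skill tagged wanted_tag."""
    return [
        (card["name"], card["url"])
        for card in cards
        if any(wanted_tag in skill.get("tags", []) for skill in card.get("skills", []))
    ]


if __name__ == "__main__":
    # A coordinator that needs scheduling help picks from the advertised cards.
    print(find_agents(CARDS, "scheduling"))
    # [('CalendarBot', 'https://calendar.example.com/a2a')]
```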

Task Messages with Lifecycle: Agents communicate by sending tasks to each other. A task is a structured message (it can include text and even file data) describing what needs to be done. Each task has a unique ID and moves through a lifecycle of states; in the A2A specification these include submitted, working, and completed, plus states such as input-required, failed, and canceled for other outcomes. For long-running tasks, the protocol lets agents send status updates so they stay in sync. When the remote agent completes the task, it returns a result (called an “artifact” in A2A terms, basically the output of the task). This could be a piece of data, a confirmation that an action was taken, or any result of the requested operation.
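The task lifecycle can be modeled as a small state machine. This sketch borrows the task state names used in the A2A specification (`submitted`, `working`, `input-required`, `completed`, `canceled`, `failed`), but the transition table, the `Task` class, and the artifact shape are our own simplifications for illustration, not the protocol’s wire format.

```python
import uuid
from dataclasses import dataclass, field

# State names follow those used in the A2A specification; the table of
# allowed transitions is a simplified assumption made for this sketch.
TRANSITIONS = {
    "submitted": {"working", "canceled", "failed"},
    "working": {"input-required", "completed", "canceled", "failed"},
    "input-required": {"working", "canceled"},
    "completed": set(),  # terminal
    "canceled": set(),   # terminal
    "failed": set(),     # terminal
}


@dataclass
class Task:
    description: str
    task_id: str = field(default_factory=lambda: str(uuid.uuid4()))  # unique task ID
    state: str = "submitted"
    # Status updates the remote agent would send back to the client agent.
    history: list = field(default_factory=lambda: [("submitted", "task received")])
    # Outputs ("artifacts") produced by the remote agent.
    artifacts: list = field(default_factory=list)

    def update(self, new_state: str, note: str = "") -> None:
        """Move to new_state, rejecting transitions the table does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.state = new_state
        self.history.append((new_state, note))

    def complete(self, artifact: dict) -> None:
        """Finish the task; the artifact is stored only if completion is allowed."""
        self.update("completed", "result attached")  # raises if not allowed
        self.artifacts.append(artifact)


if __name__ == "__main__":
    task = Task("Schedule a 30-minute sync with Dana next week")
    task.update("working", "checking calendars")
    task.complete({"parts": [{"type": "text", "text": "Booked Tuesday 10:00"}]})
    print(task.state, [state for state, _ in task.history])
    # completed ['submitted', 'working', 'completed']
```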

What does all this enable in practice? It means you can have a team of specialized AI agents that coordinate as a workflow. Each agent can focus on what it’s best at, and A2A handles the communication plumbing so they can jointly fulfill complex requests. For instance, Google gave an example of a hiring scenario: within a single interface, a hiring manager’s main agent could use A2A to enlist a recruiter agent to find candidate resumes, then ask a scheduler agent to set up interviews, and later a background-check agent to verify credentials. The person just sees the end-to-end process happening, but behind the scenes multiple agents (with different data access and skills) cooperated.
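The hiring flow can be sketched as a coordinator that delegates each step to a specialist and feeds each result into the next request. Everything here is hypothetical: the three agents are local functions returning canned data, the skill names in `REGISTRY` are made up, and each direct function call stands in for what would be an A2A task sent to a remote agent and answered with an artifact.

```python
from typing import Callable, Dict

# Hypothetical specialist agents. In a real A2A deployment each would be a
# remote service; here each is a plain function that takes the task input
# and returns an artifact (a dict).
def recruiter_agent(task: dict) -> dict:
    return {"candidates": ["Ana", "Ben", "Chen"][: task["max_candidates"]]}


def scheduler_agent(task: dict) -> dict:
    slots = ["Mon 10:00", "Mon 14:00", "Tue 11:00"]
    return {"interviews": dict(zip(task["candidates"], slots))}


def background_check_agent(task: dict) -> dict:
    # Canned result: every candidate passes in this toy example.
    return {"verified": {name: True for name in task["candidates"]}}


# Maps an advertised skill to the agent that provides it.
REGISTRY: Dict[str, Callable[[dict], dict]] = {
    "find_candidates": recruiter_agent,
    "schedule_interviews": scheduler_agent,
    "verify_credentials": background_check_agent,
}


def hiring_coordinator(role: str, max_candidates: int = 2) -> dict:
    """Main agent: delegate each step to a specialist and merge the results."""
    context: dict = {"role": role, "max_candidates": max_candidates}
    for skill in ["find_candidates", "schedule_interviews", "verify_credentials"]:
        artifact = REGISTRY[skill](context)  # stands in for an A2A task round-trip
        context.update(artifact)             # later agents see earlier results
    return context


if __name__ == "__main__":
    print(hiring_coordinator("Backend engineer"))
```

Keeping the coordinator ignorant of how each specialist works is the point: swapping in a different recruiter agent only means registering a different handler for `find_candidates`.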

Another important aspect is that A2A is framework-agnostic. It’s not only for Google’s AI or Anthropic’s or OpenAI’s – it’s meant to be an open protocol any agent can implement. This allows, say, an agent built on OpenAI’s platform to communicate with another built on Google’s, as long as both speak A2A. It’s akin to how anyone can send an email to anyone else because SMTP is a universal standard, no matter what email software each side uses.

In summary, A2A provides the missing piece for multi-agent systems: a common language for inter-agent communication, with built-in means for discovering who can do what, managing tasks between agents, and keeping communications secure and structured. Google and its partners see this as key to scaling autonomous workflows in enterprises – where instead of one monolithic AI trying to do everything, you have an orchestra of AI agents, each playing its part in harmony via A2A.

Real-World Examples of MCP and A2A

Atlassian’s Rovo Agents: Cross-Platform Collaboration with A2A

Atlassian, the company behind workplace tools like Jira and Confluence, has been investing in Rovo, an AI agent platform aimed at improving productivity in teamwork settings. With multiple agents operating in a suite of tools, Atlassian saw the need for them to coordinate actions across Jira, Confluence, and other systems. This is a textbook case for A2A. By adopting the Agent-to-Agent protocol, Atlassian’s Rovo agents can seamlessly communicate and collaborate on tasks in a secure, standardized way.

A practical use case Atlassian envisions is agents autonomously coordinating project timelines and resources across distributed teams – one agent might gather status updates, another adjusts timelines, another prepares summary reports – all negotiating via A2A. This could dramatically reduce the manual overhead in project management. The result is a more fluid, connected experience in tools like Jira and Confluence, where routine updates happen automatically and consistently.

A Web3 Investment Platform Example: Using MCP and A2A

To make things even more concrete, let’s walk through a hypothetical (but very plausible) scenario that uses both MCP and A2A. Imagine a Web3 investment platform – a decentralized app where users can manage crypto assets, research projects, and execute trades. We want this platform to have AI agents that can assist users and also coordinate behind the scenes to provide a smooth experience. Here’s how it could work:

Setting the stage: On this platform, you (the user) have an AI Portfolio Advisor agent. This agent’s job is to help you make investment decisions, e.g., give insights on token performance, alert you to risks, and execute trades with your approval. There’s also an AI Compliance & Risk agent in the system, which watches for security red flags or compliance issues (important in Web3, where scams and hacks are real risks). Additionally, there’s a Market Data agent that specializes in real-time crypto market info, and maybe even an External News agent that summarizes relevant news from crypto forums or Twitter. In this setup, each agent uses MCP to reach its own tools and data sources (the Market Data agent, for instance, connects to price feeds), while the agents use A2A to hand work to one another (the Portfolio Advisor, for instance, asks the Compliance & Risk agent to vet a token before proposing a trade).
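To make the two layers concrete, here is a minimal, hypothetical sketch of the Portfolio Advisor handling one request: it reads a price through an MCP-style tool call and asks the Compliance & Risk agent, via an A2A-style task, to vet the token before proposing a trade. Both protocols are replaced by direct function calls, and the `get_price` tool, token symbols, prices, and risk list are all made up for illustration.

```python
from dataclasses import dataclass

# --- MCP layer (hypothetical): a market-data tool server with canned prices ---
CANNED_PRICES = {"ETH": 3000.0, "RUG": 0.01}


def mcp_call_tool(name: str, arguments: dict) -> dict:
    """Stand-in for an MCP tools/call round-trip to a market-data server."""
    if name != "get_price":
        raise ValueError(f"unknown tool {name}")
    price = CANNED_PRICES[arguments["symbol"]]
    return {"content": [{"type": "text", "text": str(price)}], "isError": False}


# --- A2A layer (hypothetical): a Compliance & Risk agent that receives tasks ---
FLAGGED_TOKENS = {"RUG"}  # made-up risk list


def compliance_agent(task: dict) -> dict:
    """Stand-in for a remote agent; returns a completed task with one artifact."""
    symbol = task["input"]["symbol"]
    approved = symbol not in FLAGGED_TOKENS
    reason = "ok" if approved else "token is flagged"
    return {"id": task["id"], "state": "completed",
            "artifacts": [{"approved": approved, "reason": reason}]}


@dataclass
class Proposal:
    symbol: str
    amount_usd: float
    price: float
    approved_by_compliance: bool
    needs_user_approval: bool = True  # the advisor never trades without the user


def portfolio_advisor(symbol: str, amount_usd: float) -> Proposal:
    # 1. MCP: fetch live data through a tool.
    reply = mcp_call_tool("get_price", {"symbol": symbol})
    price = float(reply["content"][0]["text"])
    # 2. A2A: delegate the risk check to a peer agent and read its artifact.
    result = compliance_agent({"id": f"risk-{symbol}", "input": {"symbol": symbol}})
    verdict = result["artifacts"][0]
    return Proposal(symbol, amount_usd, price, verdict["approved"])


if __name__ == "__main__":
    print(portfolio_advisor("ETH", 500.0))
    print(portfolio_advisor("RUG", 100.0))
```

Note that the advisor only ever produces a proposal: executing the trade stays behind the user’s approval, matching the role described above.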

This example showcases MCP as the integration layer for tools/data, and A2A as the collaboration layer for agent teamwork. The clear separation means each protocol does what it’s best at: MCP makes an agent more capable individually, and A2A makes the collective more powerful than the sum of its parts. This kind of architecture could be applied to many domains beyond Web3 – think healthcare (an AI doctor agent consulting an AI pharma database agent and an AI insurance agent), or smart cities (traffic control agent talking to weather agent and public transport agent), and so on. It’s a flexible, scalable vision for agent-driven ecosystems.

Conclusion

MCP and A2A may just be buzzwords for now, but they represent a significant shift in how we build AI systems. By standardizing the way agents connect to tools (MCP) and how agents talk to each other (A2A), these protocols are laying the groundwork for a more interoperable, collaborative AI future. The impact is already being felt: developers can build AI solutions faster by leveraging MCP’s plug-and-play integrations, and organizations are exploring dynamic workflows where multiple AI agents coordinate via A2A to achieve business goals more efficiently.

There’s also a significant growth opportunity here: businesses that adopt these protocols early can build AI services that plug into larger networks of tools and agents. The valuations of companies closely tied to this ecosystem hint at the stakes: Cursor, an AI code editor that supports MCP, has been valued at roughly $10 billion, and Anthropic itself reached a $61.5 billion valuation after closing a $3.5 billion Series E round.

From a future-looking perspective, MCP and A2A are likely just the beginning. They set a foundation for agent-driven ecosystems where AI agents can discover each other, share context, and even negotiate or form ad-hoc teams to solve problems. We can expect these protocols to evolve (and new ones to emerge) as more real-world usage teaches us what’s needed. The good news is both Anthropic and Google have open-sourced these efforts and involved community partners, so they are building with input from many stakeholders – which bodes well for broad adoption.

To summarize, understanding MCP and A2A gives us a glimpse into the next era of AI. It’s an era where AI is not a single chatbot in a box, but an ecosystem of cooperating agents, each with access to the tools it needs and the peers it can rely on.